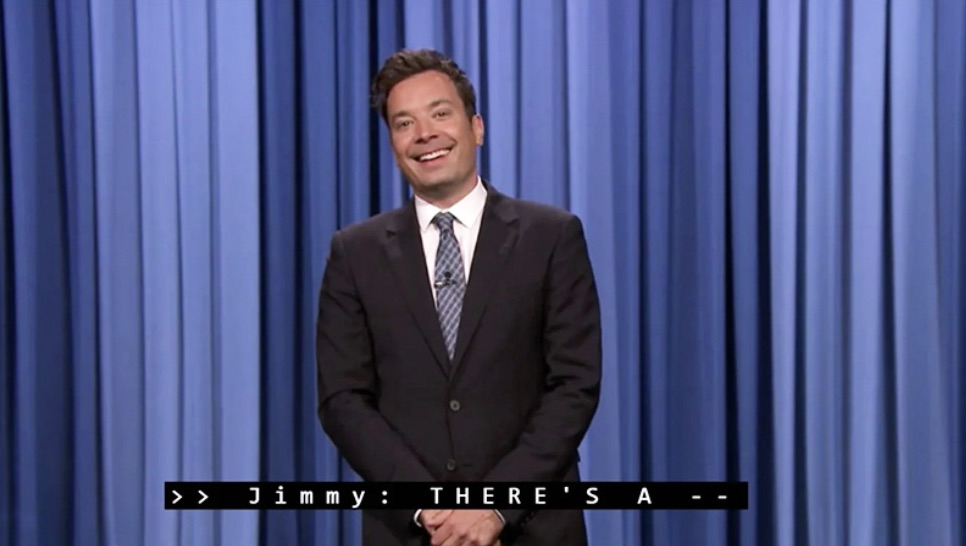

Captions

Captions are text versions of the spoken word presented within multimedia. Captions allow the content of web audio and video to be accessible to those who do not have access to audio. Though captioning is primarily intended for those who cannot hear the audio, it has also been found to help those that can hear audio content, those who may not be fluent in the language in which the audio is presented, those for whom the language spoken is not their primary language, etc.

Common web accessibility guidelines indicate that captions should be:

- Synchronized – the text content should appear at approximately the same time that audio would be available

- Equivalent – content provided in captions should be equivalent to that of the spoken word

- Accessible – caption content should be readily accessible and available to those who need it

On the web, synchronized, equivalent captions should be provided any time multimedia content (generally meaning both visual and auditory content) is present. This obviously pertains to the use of audio and video played through multimedia players and HTML5 video, but can also pertain to such technologies as Flash or Java when audio content is a part of the multimedia presentation.

Captions can be either closed or open. Closed captions can be turned on or off, whereas open captions are always visible.

All television sets with screen sizes of 13 inches and larger must contain the hardware to interpret and display closed captions. Closed captioning of most pre-recorded television programs is now a legal requirement in the United States. Television closed captioning is used by millions of individuals who are deaf or hard of hearing; millions more use it in the classroom or in noisy environments—like bars, restaurants, and airports. As the average age of the population increases, so does the number of people with hearing impairments. According to US government figures, one person in five has some functional hearing limitation. Because of the growing need for access to captions, many live broadcasts (such as news and sports events) and most pre-recorded programs now include closed captions that can be easily enabled and viewed on screen.

Closed captions for television are very limited in their formatting, because the caption look, feel, and location are determined by the caption decoder built into the television set. You can get more information about television captioning at Captioning FAQ.

Open captions are similar to, and include the same text, as closed captions, but the captions are a permanent part of the video picture, and cannot typically be turned off. Open captions are not decoded by the television set, but are a part of the video information. This typically requires a video editing or encoding program that allows you to overlay titles onto the video. The captions are visible to anybody viewing the video clip and cannot be turned off. This gives you total control over the way the captions appear, but can be very time consuming and expensive to produce. This technique allows for more control over caption location, size, color, font, and timing.

For web video, captions can be open, closed, or both. Closed captions are most common, utilizing functionality within video players and browsers to display closed captions on top of or immediately below the video area.

The most common forms of web multimedia – Flash and HTML5 Video – both support captioning. Older technologies, such a Windows Media Player, QuickTime, and RealPlayer also support captioning. The formats and techniques for authoring and implementing captions may vary based on the technology used.

If you have a video that is not closed captioned, please create an account or use an existing account at rev.com

Transcripts

Transcripts also provide an important part of making web multimedia content accessible. Transcripts allow anyone that cannot access content from web audio or video to read a text transcript instead. Transcripts do not have to be verbatim accounts of the spoken word in a video. They should contain additional descriptions, explanations, or comments that may be beneficial, such as indications of laughter or an explosion. Transcripts allow deaf/blind users to get content through the use of refreshable Braille and other devices. For most web video, both captions and a text transcript should be provided. For content that is audio only, a transcript will usually suffice.

Transcripts provide a textual version of the content that can be accessed by anyone. They also allow the content of your multimedia to be searchable, both by computers (such as search engines) and by end users. Screen reader users may also prefer the transcript over listening to the audio of the web multimedia. Most proficient screen reader users set their assistive technology to read at a rate much faster than most humans speak. This allows the screen reader user to access the transcript of the video and get the same content in less time than listening to the actual audio content.

In order to be fully accessible to the maximum number of users, web multimedia should include both synchronized captions AND a descriptive transcript.

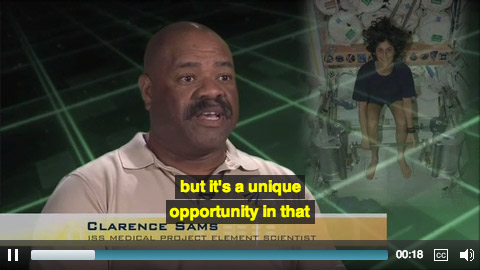

Audio Descriptions

Audio descriptions are intended for users with visual disabilities. They provide additional information about what is visible on the screen. This allows video content to be accessible to those with visual disabilities. Though not commonly utilized in television and movies, it is gaining in popularity. Audio descriptions are helpful on the web if visual content in web video provides important content not available through the audio alone. An example of audio descriptions for something you have probably seen and heard is found below. Can you visualize what is being described?

Listen to Audio Descriptions in MP3 Format (152KB)

If web video is produced with accessibility in mind, then audio descriptions are often unnecessary, as long as visual elements within the video are described in the audio.

Producing audio descriptions can be expensive and time-consuming. When producing a video for the web, the need for audio descriptions can often be avoided. If the video were displaying a list of five important items, the narrator might say, “As you can see, there are five important points.” In this case, audio descriptions would be necessary to provide the visual content to those with visual disabilities who cannot ‘see’ what the important points are. However, if the narrator says, “There are five important points. They are…” and then reads or describes each of the points, then the visual content is being conveyed through audio and there is no additional need for audio descriptions.

Real-time Captioning – The Real-time Dilemma

Web multimedia is being used more and more to deliver real-time, live content over the Internet – from video conferencing, to VoIP (Voice-over-Internet Protocol), to live video streaming. Accessibility standards require that equivalent alternatives be provided for audio and visual content. For real-time web multimedia, this means that visual content must be provided in an auditory form and that auditory content must be provided in a visual form. The equivalents must also be synchronized with the presentation, meaning that they must be delivered to the end user at the same time as the main content (e.g., captions for audio must display at the same time that the audio would be heard).

Audio description

The alternative to visual content in standard web media often takes the form of audio descriptions, where visual content that is not also provided in the audio stream of the multimedia is described by a narrator or other person. Audio descriptions are very difficult to incorporate into real-time web broadcasts. As an alternative, you can simply ensure that any visual content is natively described in the audio. For instance, if there is a person speaking on the video, they could audibly describe any additional visual content that is displayed in the movie, thus removing the need for a secondary form of audio description. This is the only feasible way to make live web broadcasts that include visual information accessible to individuals who are blind or have low vision. If produced with this in mind, and if those involved in the video broadcast are aware of and provide these descriptions, then the multimedia will be accessible to these audiences.

Captions

The alternative to auditory content in standard web media is usually synchronized captions. Captions provide a textual equivalent of all audible information. The difficulties in generating real-time captions are:

- Audio information must be converted into text in real time.

- The text captions must be delivered to the end user so they are synchronized with the audio.

Generating Real-time Text

Converting audio information into text in real time is difficult. Unfortunately, few typists can type fast enough to transcribe the spoken word. Thus, there are two primary technologies used to do this.

Stenography/Real-time transcription

Stenography involves having a trained transcriptionist (often called a stenographer or court reporter) that uses a special typewriter-like device called a steno machine to transcribe the spoken word to a text format in real-time. The steno machine has fewer keys (usually 22) than a typical keyboard. Rather than typing each letter, a stenographer hits key sequences on the steno machine to represent phonetic parts of words or phrases, or special codes representing words. Software then analyzes the phonetic information and forms words. Such technology allows a trained transcriptionist to generate text versions of audible conversation in real time.

Stenography allows the audible information to be converted to text in real time (well, perhaps a second or so after it is spoken). While accuracy levels are high, it is common to have words be incorrectly typed or interpreted by the steno software. Also, real-time transcription can be expensive, usually costing around $70-$120 USD per hour.

Voice recognition

While voice recognition offers great possibilities for real-time generation of captions, at this time, the technology is not yet at a level where it can be used to do so. In certain settings, such as when one person is speaking and is using voice recognition software that is well-trained, then voice recognition may be a viable option. Even in such settings, however, there are weaknesses, such as a lack of punctuation, poor accuracy, and inability for other speakers to be captioned.

While voice recognition technology is improving and promises future multi-user, highly accurate, speaker independent voice recognition, at this time, its feasibility in generating text for use in captions is isolated to few situations.

Delivery of Real-time Captions

As soon as the text equivalent of the audio has been generated, that text must be delivered to the end user so it is synchronized with the audio stream. Unfortunately, few real-time multimedia technologies have native support for captioning. Thus, the real-time captions must usually be delivered through a different technology running parallel to the multimedia software or hardware. This is often done through dedicated applications or through clients that are built into a web page and run in a web browser.

For video conferencing and voice chats, where the audio is delivered in real time, the captions must be generated, converted into a format for broadcast across the Internet, and then delivered to the end user – all in real time. For streaming video, there is often a delay between when the media is captured and when it displays to the end user, often due to encoding and buffering. In these cases, the delivery mechanism for the real-time captions must provide functionality for ensuring that the captions display at roughly the same time that the audio would be heard, even if the delay between caption generation and delivery is a long time.

Conclusion

While captioning real-time web multimedia is not always easy, it is possible and should always be done when real-time multimedia is being delivered. Fortunately, the technologies are improving to a level that allows real-time captioning to be both easy and financially viable in most situations.

The technologies used to provide real-time captions over the web are not limited to providing those captions as an alternative to web-based multimedia only. Such caption delivery systems can also be used to provide captions for non-web-based technologies such as radio, television, video conferencing, etc. This will ensure accessibility to all forms of live, real-time multimedia.